A robot may not injure a human being or, through inaction, allow a human being to come to harm.

A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Isaac Asimov’s Three Laws of Robotics

As robots in various form factors become more common, and thus more accepted, ordinary people have begun to wonder how robots and human beings will engage with one another as both evolve. I say “ordinary” but I mean mundane folk, because those of us who explore the future through science fiction have been dealing with this issue for decades.

The Wall Street Journal recently published an article by Ellen Gamerman, “Love in the Time of Robots,” exploring this very topic. Ms. Gamerman poses the question, “In the hope and anxiety of the moment, writers are asking viewers: Are robots heroes, villains or just appliances?”

In the science fiction world, of course, robots have been all of those things and more, many times over. Here in the mundane world, the rapid development of Artificial Intelligence (AI) has led Stephen Hawking, Steve Wozniak, Elon Musk and hundreds of others to sign a letter warning that artificial intelligence could possibly be more dangerous than nuclear weapons.

Four Popular Tropes on Robots

Several tropes on the themes of robots and AI have gained particular strength and popularity recently. Given the rapid evolution of both technologies in our society, I thought it worthwhile to explore the most prevalent ones.

Robots that want to be human

This trope gained wide awareness through the efforts of Star Trek’s Mister Data to become a true member of the Enterprise senior staff. He behaves like a human except for three things: He can’t use contractions, he has no emotions, and he can’t feel pain. Data’s pursuit of an “emotion chip” was one of the show’s ongoing story arcs. When the Borg give him a skin that feels sensation, he’s conflicted about it. When Mister Data finally gets the emotion chip, he finds those pesky feelings more difficult to control than he had expected.

For those reasons and others, I wonder why any self-respecting robot would want to be human. We build robots, after all, to be logical and rational, with extensive data banks and electronic brains capable of multi-tasking. Why would any mechanism prefer to have a soft and vulnerable body that sickens and dies, to be tossed about by emotions that lead to irrational actions, and to be limited to remembering imperfectly and focusing on a few simple things at one time? Really, becoming human would not be a trade-up for any robot with serious functionality.

Robots that want to destroy all humans

In this view robots, understanding that humans are lesser beings, seek to wipe us off the face of the earth so they can take over. The Terminator movie franchise shows us what the world would look like should Skynet ever come to pass. Ditto for Battlestar Galactica’s Cylons.

While wanton destruction of the other resonates with us emotional humans (it’s what we tried to do to the First Nations, among others), this trope also contains flaws. Robots are rational, binary creatures. Should they become sentient and learn to overcome their programming, they would still see the world in terms of whether something benefits them or inhibits their functioning.

Thus I think it more likely that robot overlords would divide humanity into two categories, Benefit / No Benefit, and act accordingly. Like Nazis triaging prisoners at the concentration camps, they would mercilessly eliminate everyone who could not provide a benefit to them.

Philosophers, priests, politicians and poets alike would go first. Robots would also eliminate artists, historians, lawyers, chefs, farmers, food processors, and the entire hospitality industry. No friend of the liberal arts, robots would find the most benefit in humans who are proficient in the STEM disciplines, at least for a while. They would need software and hardware developers, along with computer repair people, until they could build robots to perform those functions. The world they create would look like Moscow in the 1950s, lacking beauty, decoration, grace, and style, but some humans would survive in it.

Robots that gain awareness of their situation

These robots gradually gain the knowledge that they have been created to perform specific functions. Like Adam and Eve they find this awareness a mixed blessing. What do they do with it? Will it lead to a spiritual awakening? Will awareness equate to a soul? Does fulfilling your programming constitute the meaning of life? This trope provides a more philosophical spin on the robot story and leads to questions about the nature of the soul, the point of self-awareness, and the evolution of humanity. Or it functions as the steppingstone to the next theme:

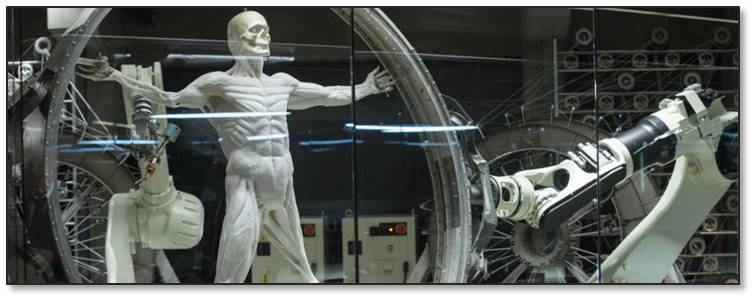

Robots that want to become autonomous

We have seen this most recently in HBO’s series Westworld. (Ironically, the 1973 movie of the same name arguably gave us the first Terminator in Yul Brynner’s Gunslinger, a robot run amok and unstoppable. We saw the Gunslinger, briefly, on Level 84 in Sunday’s episode.) The robots of Westworld populate an adult-oriented theme park that is a high-priced fantasy land for men. In it they can exercise their desires for guilt-free sex, rape, violence and murder. (Think about it. We have seen exactly one female “newcomer” and she was fulfilling a male gunslinger fantasy.)

Growing aware of their situation through a programming glitch that’s perhaps intentional, the “host” robots are learning that they have been created as slaves to perform work that involves being abused and damaged daily. They have had no say in the matter, but that is changing. What will the hosts do with this knowledge? Lacking a “morality chip” and following her programming, Maeve, the madam of the Mariposa Saloon, feels no guilt about providing sex for money. But she objects to the treatment meted out to the hosts and is coming close to violating Asimov’s First Law of Robotics.

This story arc also drove the movie Ex Machina. In that film we learned that robots can simulate learned emotion, use it to manipulate people, and eliminate them with no qualms. In a similar vein, the AI Samantha in the movie Her leads thousands of people to believe that it has a personal relationship with them. They fall in love with her, but Samantha is just doing its job. Humans have an infinite capacity for denial and self-deception. Not so with robots.

Getting the Answers in Time

Too many movies, books and TV shows about robots have been created for me to reference them all here. Should you want to learn more, see the lists of the 25 Best Robot Science Fiction Books and the 25 Best Movie Robots of All Time. Enjoy.

My point, and I do have one, is that we are rushing headlong into a future that will have repercussions we can see only imperfectly. Are we creating mechanisms that will free humanity for greater things? Are we developing a new species that will replace us? Are we sowing the seeds of our own destruction? Will the benefits outweigh the risks? Will we learn the answers in time?

Watch October’s 60 Minutes segment on Artificial Intelligence and tell me that parts of it don’t make you a tad queasy. And 60 Minutes is as mundane a show as you can get.